+- Kodi Community Forum (https://forum.kodi.tv)

+-- Forum: Discussions (https://forum.kodi.tv/forumdisplay.php?fid=222)

+--- Forum: Feature Requests (https://forum.kodi.tv/forumdisplay.php?fid=9)

+--- Thread: VDPAU API for Linux released by NVIDIA today - GPU hardware accelerated video decoder (/showthread.php?tid=40362)

- beefke - 2009-01-04

What I would like to see is an XBMC build with VDPAU support compiled in, where you can define, for example in advancedsettings.xml (or at a later stage via a menu setting), whether you prefer VDPAU or software decoding (default: software).

This would allow turning hardware decoding off for movies that don't work well with it, or for setups that don't support it.
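A minimal sketch of how such a switch could be read, assuming a hypothetical `<video><decoder>` element in advancedsettings.xml (no such XBMC setting exists in this thread; the tag name and its values are made up for illustration). Anything missing, malformed or unknown falls back to the software default:

```python
import xml.etree.ElementTree as ET

# Hypothetical advancedsettings.xml fragment; <decoder> is NOT an existing
# XBMC setting, it only illustrates the proposal above.
SAMPLE = """<advancedsettings>
  <video>
    <decoder>vdpau</decoder>
  </video>
</advancedsettings>"""

VALID_DECODERS = {"software", "vdpau"}


def preferred_decoder(xml_text: str) -> str:
    """Return the configured decoder, defaulting to software decoding."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError:
        return "software"  # a broken settings file must never break playback
    node = root.find("./video/decoder")
    if node is None or node.text is None:
        return "software"  # setting absent: keep the safe default
    value = node.text.strip().lower()
    return value if value in VALID_DECODERS else "software"


print(preferred_decoder(SAMPLE))                # vdpau
print(preferred_decoder("<advancedsettings/>"))  # software
```

The important design point is the default: an unreadable or unexpected value leaves the user with working software decoding.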

- althekiller - 2009-01-04

beefke Wrote:can you try to patch ffmpeg with the patch proposed by Carl Eugen?

Link? The only thing I can see that he has done is link NVIDIA's original mplayer patches to the mplayer-dev list. We need a set of pure ffmpeg patches, and a fallback to the software path is a MUST before considering putting anything in XBMC.
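The software fallback demanded above could look roughly like this. It is a sketch only: `VdpauDecoder`, `SoftwareDecoder` and `open_decoder` are hypothetical stand-ins, not FFmpeg or XBMC APIs, and the supported-codec set simply mirrors the H.264-only state of the FFmpeg commit mentioned later in the thread.

```python
class DecoderInitError(Exception):
    """Raised when a decoder cannot handle the given stream."""


class VdpauDecoder:
    # Hypothetical stand-in; a real one would call into FFmpeg/VDPAU.
    supported_codecs = {"h264"}

    def __init__(self, codec: str, hw_available: bool):
        if not hw_available:
            raise DecoderInitError("no VDPAU-capable device")
        if codec not in self.supported_codecs:
            raise DecoderInitError(f"VDPAU cannot decode {codec}")
        self.name = "vdpau"


class SoftwareDecoder:
    # Hypothetical stand-in for the existing software path; never refuses.
    def __init__(self, codec: str, hw_available: bool):
        self.name = "software"


def open_decoder(codec: str, preference: str, hw_available: bool):
    """Try decoders in order of preference; software is always tried last."""
    chain = [SoftwareDecoder]
    if preference == "vdpau":
        chain.insert(0, VdpauDecoder)
    for decoder_cls in chain:
        try:
            return decoder_cls(codec, hw_available)
        except DecoderInitError:
            continue  # fall through to the next decoder in the chain
    raise DecoderInitError("no decoder available")  # unreachable: software never fails


print(open_decoder("h264", "vdpau", hw_available=True).name)        # vdpau
print(open_decoder("mpeg2video", "vdpau", hw_available=True).name)  # software
```

This only covers failure at open time; a decoder that errors out mid-stream would presumably need a similar hand-over, which is harder because decoder state has to be rebuilt.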

- CapnBry - 2009-01-04

The problem is not that the patches won't apply to the XBMC tree, nor that there will be compile errors to clean up, but rather that XBMC renders to a texture and then composites the texture to the screen via OpenGL. VDPAU creates what's called a presentation target, which is bound to an X11 drawable, and then hardware-decodes frames to it. Compositing an OSD in VDPAU requires compositing your own images together and then passing the ready-to-be-drawn image to it for overlay.

Well what's the big fucking deal, images are images and screen is screen right? NO! OpenGL textures used for the OSD are scaled by GL and composited by GL and then merged with the video (which is a texture) by GL and then it is blitted by GL. VDPAU requires that you do your own software texture scaling and blending by hand before shipping it to VDPAU which will blit it to the screen.
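To give a feel for what "scaling by hand" means, here is a nearest-neighbour resampler for a packed 8-bit RGBA buffer, the kind of work GL otherwise does on the GPU for every OSD texture. It is a sketch under simple assumptions (row-major RGBA, 4 bytes per pixel, no filtering); pure Python is far too slow for real-time use, and a real skin engine would want bilinear or better filtering.

```python
def scale_nearest(src: bytes, sw: int, sh: int, dw: int, dh: int) -> bytearray:
    """Nearest-neighbour resample of a packed 8-bit RGBA image from sw x sh to dw x dh."""
    dst = bytearray(dw * dh * 4)
    for y in range(dh):
        sy = y * sh // dh  # source row that this destination row samples
        for x in range(dw):
            sx = x * sw // dw  # source column that this destination column samples
            s = (sy * sw + sx) * 4
            d = (y * dw + x) * 4
            dst[d:d + 4] = src[s:s + 4]  # copy all four RGBA bytes
    return dst


red = bytes([255, 0, 0, 255])
blue = bytes([0, 0, 255, 255])
doubled = scale_nearest(red + blue, 2, 1, 4, 2)  # 2x1 image upscaled to 4x2
```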

Essentially all the UI code and video presentation code in XBMC has to be tossed aside and a whole new system would have to be created because XBMC relies on video hardware to do all that currently. There are two options with various issues:

1) VDPAU can render to an X11 pixmap; hey, it is a drawable, why not? nVidia cards have the GLX_EXT_texture_from_pixmap extension. We could force VDPAU to render to a pixmap, copy it to a texture, composite in GL, and Bob's your uncle: accelerated video decoding. Downside: you lose some of the VDPAU features; supposedly their vsync code is spot on. There's also a small performance hit for rendering to a pixmap as opposed to the screen.

2) Software-scale and blend all XBMC graphics to make them available for VDPAU compositing. All the features of VDPAU can be included; however, this requires a MASSIVE amount of image manipulation in XBMC, negating most of the benefit of VDPAU.
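A back-of-the-envelope estimate of option 2, assuming a single full-screen 1080p RGBA layer that is re-blended on the CPU every frame at 60 fps, with 12 bytes of memory traffic per pixel (read source, read destination, write destination). These are upper bounds; real numbers depend on how much of the OSD actually changes per frame:

```python
def blend_cost(width: int, height: int, fps: int, layers: int = 1):
    """Rough CPU work for alpha-blending full-screen RGBA layers every frame.

    Assumes 12 bytes of memory traffic per pixel per layer: read the layer
    pixel (4), read the destination pixel (4), write it back (4).
    Ignores scaling, caches and any partial-update optimisation.
    Returns (pixels blended per second, bytes moved per second).
    """
    pixels_per_frame = width * height * layers
    bytes_per_frame = pixels_per_frame * 12
    return pixels_per_frame * fps, bytes_per_frame * fps


pixels_s, bytes_s = blend_cost(1920, 1080, 60)
print(f"{pixels_s:,} pixels/s, {bytes_s / 1e9:.2f} GB/s")  # 124,416,000 pixels/s, 1.49 GB/s
```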

So #1 looks like the best option available. When will it be done? When you submit a diff. Why is someone suggesting users write the code? Because the XBMC devs are the users and the users are the devs. Have you seen how much code goes into SVN every day? If you can't contribute by writing code, you can contribute by not continuously asking for status updates. If you want status updates, watch the SVN log. When you see anything related to VDPAU in the commit log, maybe MAYBE that's when an inquiry is in order.

- beefke - 2009-01-05

CapnBry Wrote:The problem is not that the patches won't apply to the XBMC tree, not that there will be compile errors that will need to be cleaned up

VDPAU is now in the ffmpeg SVN, so this will indeed be no problem.

Quote:Well what's the big fucking deal, images are images and screen is screen right? NO! OpenGL textures used for the OSD are scaled by GL and composited by GL and then merged with the video (which is a texture) by GL and then it is blitted by GL. VDPAU requires that you do your own software texture scaling and blending by hand before shipping it to VDPAU which will blit it to the screen.

I'm no graphics expert, but isn't it possible to pass OpenGL textures to VDPAU?

Quote:1) VDPAU can render to X11 pixmap, hey it is a drawable why not. nVidia cards have the extension texture_from_pixmap. We could force VDPAU to render to pixmap, copy it to texture, composite in GL, bob's your uncle = accelerated video decoding. Downside: you lose some of the VDPAU features, like supposedly their vsync code is spot on. There's also a small performance hit for rendering to pixmap as opposed to the screen.

I think that, according to this thread, there is no synchronisation (yet) between the copy and the reading into GL, but at least NVIDIA is aware of it.

Quote:So #1 looks like the best option available. When will it be done? When you submit a diff.

I think it is very difficult to start on an existing system without any experience with the code, certainly not when you have to rewrite the whole graphics system. (I think this would take me ages, while someone who wrote it could do it in a few days.)

I'm not asking when someone can submit a patch, but rather what has to change and in what direction we could be going.

Thx for your reply btw, I learned a lot

- Gamester17 - 2009-01-05

CapnBry Wrote:The problem is not that the patches won't apply to the XBMC tree, not that there will be compile errors that will need to be cleaned up, but rather that XBMC software renders to texture then composites the texture to screen via opengl. VDPAU creates what's called a presentation target then hardware decodes frames to that which is bound to an X11 drawable. Compositing OSD in VDPAU requires compositing your own images together and then passing the ready-to-be-drawn image to it for overlay.

Yes, this is all explained further by FFmpeg's developers on their own mailing list here:

https://lists.mplayerhq.hu/mailman/listinfo/ffmpeg-devel/

Especially read the thread with the topic "[FFmpeg-devel] Implementing VDPAU":

http://lists.mplayerhq.hu/pipermail/ffmpeg-devel/2009-January/thread.html#58651

...so unfortunately it is not as simple as "if FFmpeg supports it then XBMC will also support it".

- [Ad0] - 2009-01-05

spiff Wrote:i am an INDIVIDUAL and i have EVERY RIGHT to express my PERSONAL opinions. you have absolutely NO right to reduce me to a mere sheep in a flock

Sorry man.

- Deanjo - 2009-01-05

BLKMGK Wrote:Now, I'm not sure who maintains the ffmpeg code in XBMC but whoever it is is probably watching the ffmpeg mailing list and is probably WELL aware of these patches. They are probably playing with them quietly waiting for them to mature or they have a philosophical issue with the closed source stuff and refuse - maybe they just haven't had time.

VDPAU has just been accepted into the official ffmpeg tree.

http://git.ffmpeg.org/?p=ffmpeg;a=commit;h=d286159ad7620d37b8bfeacdba25686eb68c5046

EDIT: Whoops see that it was already brought up.

- nipnup - 2009-01-05

Quote:2) Software scale and blend all XBMC graphics to make them available for VDPAU compositing. All the features of VDPAU can be included, however this requires MASSIVE amount of image manipulation in XBMC, negating a majority of the benefit of VDPAU.

I'll admit upfront that I do not have much of a clue about OpenGL, but couldn't the whole OGL stuff simply be handed to Mesa in this case (albeit at a performance hit, of course)? I seem to remember XBMC even having an option for software rendering already?

If not, how about simply not having an OSD for the time being to get 1080p decoding on an affordable setup? To me, that's a lot more useful than the OSD...

- CapnBry - 2009-01-05

beefke Wrote:I'm no graphical expert, but isn't it possible to pass opengl textures to vdpau?

No, you can't. VDPAU only takes prescaled RGBA data for compositing. The main reason it doesn't take GL textures (my conjecture) is that the VDPAU presentation surface doesn't have any model/view/projection matrix, making it impossible to ascertain where in the source OSD image to take a pixel to put on the screen at a given screen X,Y. The method they went for is to make the mapping 1 input OSD image pixel = 1 output screen image pixel.

But that is good news that VDPAU has gotten accepted into ffmpeg, not that it solves any of XBMC's problem with it though.
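The matrix point above can be made concrete. With a transform matrix, the renderer can invert it to find, for each screen pixel, which source texel to sample; with a fixed 1 input pixel = 1 output pixel mapping, that inverse mapping has to be done on the CPU before the data is handed over. The sketch below handles only 2D affine transforms, and the helper names are hypothetical:

```python
def invert_affine(m):
    """Invert a 2D affine transform given as ((a, b, tx), (c, d, ty))."""
    (a, b, tx), (c, d, ty) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("transform is not invertible")
    ia, ib, ic, id_ = d / det, -b / det, -c / det, a / det  # inverse of the 2x2 part
    return ((ia, ib, -(ia * tx + ib * ty)),
            (ic, id_, -(ic * tx + id_ * ty)))


def apply(m, x, y):
    """Map point (x, y) through affine transform m."""
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])


# OSD element drawn at 2x scale with its top-left corner at screen (100, 50).
model = ((2, 0, 100), (0, 2, 50))
# Which OSD texel belongs at screen pixel (300, 250)?
print(apply(invert_affine(model), 300, 250))  # (100.0, 100.0)

# A 1:1 mapping is the identity: screen (300, 250) would take texel (300, 250),
# so the scaling and offset must already be baked into the uploaded pixels.
IDENTITY = ((1, 0, 0), (0, 1, 0))
print(apply(invert_affine(IDENTITY), 300, 250))  # (300.0, 250.0)
```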

- CapnBry - 2009-01-05

nipnup Wrote:I'll admit upfront that I do not have much clue about OpenGL, but couldn't the whole OGL stuff simply be handed to Mesa in this case (albeit at a performance hit of course). I seem to remember XBMC even having an option for software rendering already?

If not, how about simply not having an OSD for the time being to get 1080p decoding on an affordable setup? To me, that's a lot more useful than the OSD...

As far as XBMC is concerned, the whole application is an OSD, since the video can be composited with any window at any time.

I'm not sure how feasible it is to have the whole app render with Mesa to an off-screen render target, read the image back and then pass it to VDPAU, but I'd rather not think about it because it makes me cringe.

- beefke - 2009-01-05

CapnBry Wrote:No you can't. VDPAU only takes prescaled RGBA data for compositing. The main reason it doesn't take GL textures (my conjecture) is because the VDPAU presentation surface doesn't have any model/view/projection matrix making it impossible to ascertain where in the source OSD image to take a pixel to put on the screen at a given screen X,Y. The method they went for is to make the mapping 1 input OSD image pixel = 1 output screen image pixel.

But that is good news that VDPAU has gotten accepted into ffmpeg, not that it solves any of XBMC's problem with it though.

So if I understand you correctly, you would have to put the GL texture at the right position on an opaque image (e.g. 1920x1080) and pass that one to VDPAU?

- BLKMGK - 2009-01-05

Geez, you guys beat me to the acceptance! Note that this was for H.264 only; the MPEG-1 and MPEG-2 patches are still flying. Not that it helps us much, it seems.

- CapnBry - 2009-01-06

beefke Wrote:So if I understand you correctly, you would have to put the GL texture on the right position on an opaque image (i.e. 1920x1080) and pass that one to VDPAU?

Yeah, you create a 32-bit color bitmap image, resample your source image(s) to the right size, then blend them into the destination image at the proper memory location. That's not too difficult with, for example, ImageLib and a handful of static images, but it's a lot more code if you're writing a whole advanced skinning engine.
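The recipe above (32-bit canvas, resample, blend at the right memory location) might be sketched as follows. Assumptions not taken from the thread: both buffers are packed 8-bit RGBA with premultiplied alpha, and the Porter-Duff "over" operator is used; the pixel format and blend mode VDPAU actually expects would have to be checked against its documentation.

```python
def blend_over(dst: bytearray, dw: int, dh: int,
               src: bytes, sw: int, sh: int, ox: int, oy: int) -> None:
    """Composite premultiplied RGBA `src` onto `dst` with its top-left at (ox, oy).

    Both buffers are packed 8-bit RGBA, row-major, 4 bytes per pixel.
    Source pixels falling outside the destination are clipped.
    """
    for y in range(sh):
        dy = oy + y
        if not 0 <= dy < dh:
            continue
        for x in range(sw):
            dx = ox + x
            if not 0 <= dx < dw:
                continue
            s = (y * sw + x) * 4  # byte offset in the source image
            d = (dy * dw + dx) * 4  # "proper memory location" in the canvas
            inv_a = 255 - src[s + 3]
            for c in range(4):
                # "over" for premultiplied alpha: out = src + dst * (1 - src_alpha),
                # with rounding; min() only guards against non-premultiplied input.
                dst[d + c] = min(255, src[s + c] + (dst[d + c] * inv_a + 127) // 255)


# Usage: a transparent 1080p canvas with one 200x50 half-transparent red button.
canvas = bytearray(1920 * 1080 * 4)
button = bytes([128, 0, 0, 128]) * (200 * 50)
blend_over(canvas, 1920, 1080, button, 200, 50, 100, 900)
```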

- malloc - 2009-01-06

Could just have GL render to a texture of the appropriate size and then read the texture back into local memory. Of course, then we just write it back to the card for VDPAU to use.
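A rough cost of that round trip, assuming each composited 1080p RGBA frame is read back once and uploaded once at 60 fps; it counts bytes only and ignores the pipeline stall a synchronous readback can cause:

```python
def round_trip_bytes_per_second(width: int, height: int, fps: int,
                                bytes_per_pixel: int = 4) -> int:
    """Bytes crossing the bus per second for one readback plus one upload per frame."""
    frame_bytes = width * height * bytes_per_pixel
    return 2 * frame_bytes * fps  # download from GL, then upload again for VDPAU


rate = round_trip_bytes_per_second(1920, 1080, 60)
print(f"{rate:,} bytes/s (~{rate / 1e9:.2f} GB/s)")  # 995,328,000 bytes/s (~1.00 GB/s)
```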

- Gamester17 - 2009-01-06

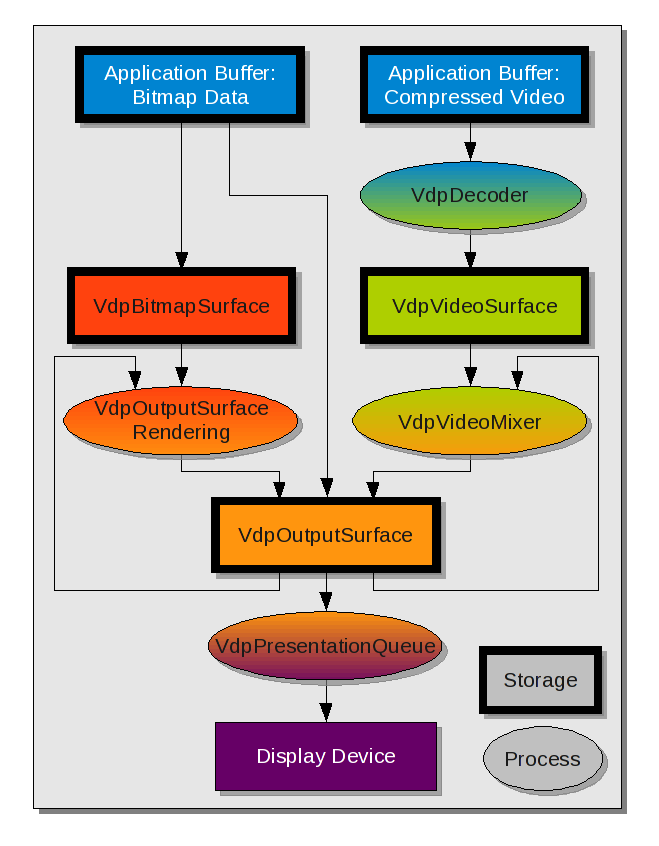

VDPAU data flow chart for reference:

http://http.download.nvidia.com/XFree86/vdpau/doxygen/html/index.html